Are you looking for the best data ingestion tools to help your business manage and process data more efficiently? With the growing amount of data being generated every day, choosing the right tool to collect, transform, and load your data is crucial. The right data ingestion tool can save you time, reduce errors, and ensure your data flows smoothly into your systems for analysis.

Whether you need to handle real-time streams, integrate data from multiple sources, or automate the process entirely, there are plenty of options to consider. This guide will walk you through some of the top data ingestion tools available today, highlighting their features, strengths, and what makes them stand out, so you can find the perfect fit for your needs.

What is Data Ingestion?

Data ingestion is the process of collecting, importing, and processing data from various sources and loading it into a storage system, database, or data warehouse for analysis and further use. This is an essential step in the data pipeline, ensuring that businesses can collect and prepare data for reporting, machine learning models, or business intelligence.

Data ingestion is a crucial part of modern data architecture, as businesses increasingly rely on data-driven decision-making. The process involves extracting data from disparate sources, such as APIs, databases, files, and real-time data streams, transforming it into a usable format, and then loading it into the system for analysis.

What are Data Ingestion Tools?

Data ingestion tools are software solutions designed to automate and streamline the process of collecting and importing data from various sources. These tools can handle a wide range of data formats and sources, including structured, semi-structured, and unstructured data. Their primary function is to extract data from one or more sources, transform it as needed, and load it into a destination system, such as a data lake, database, or data warehouse.

In addition to simple data extraction, modern ingestion tools often offer built-in capabilities for real-time data streaming, handling large-scale data volumes, and integrating with a wide variety of data sources. Many tools also support advanced features like data transformation, scheduling, error handling, and monitoring to ensure that data flows seamlessly and without interruption.

These tools play a central role in helping businesses ingest the data they need to make real-time or strategic decisions. By using an automated tool, organizations can ensure that data is ingested quickly, accurately, and consistently, reducing the manual effort involved in data collection and preparation.

The Importance of Data Ingestion in Modern Data Architecture

Data ingestion is a key component of any modern data architecture. Below are a few reasons why it matters:

- Ensures consistent data flow: Data ingestion tools ensure that the right data is collected from the right sources consistently, creating a reliable data pipeline for ongoing analytics.

- Facilitates real-time analytics: With the growing need for immediate decision-making, real-time data ingestion helps businesses analyze data as it arrives, allowing for faster, data-driven decisions.

- Improves data quality: Proper data ingestion processes include steps like cleaning, transformation, and validation, ensuring that the data entering the system is of high quality and ready for analysis.

- Supports integration across diverse systems: As businesses rely on multiple data sources (CRM systems, databases, APIs, IoT devices), data ingestion tools play a vital role in integrating all those systems into a unified data platform.

- Scalable for future needs: As data volumes increase, scalable data ingestion tools can handle larger datasets and complex structures, ensuring that businesses can continue to grow without running into performance bottlenecks.

Key Features in Data Ingestion Tools

When selecting a data ingestion tool, it’s important to consider the features that will best support your organization’s data needs. Below are some key features that can make a data ingestion tool more effective:

- Real-time data ingestion: The ability to ingest data as it arrives, providing businesses with up-to-date insights.

- Support for multiple data sources: The tool should support a wide variety of data sources, from traditional databases to cloud services, APIs, and IoT devices.

- Scalability: As your business grows and data volumes increase, the tool should scale to meet your needs without compromising performance.

- Data transformation capabilities: Built-in data transformation tools can help clean, aggregate, and format data as it’s ingested.

- Error handling and monitoring: Advanced error handling features to ensure data quality, along with real-time monitoring to track the health of the ingestion process.

- Integration with existing systems: A good tool should seamlessly integrate with your existing data infrastructure, whether that’s a data warehouse, cloud platform, or machine learning pipeline.

- Data security features: Look for tools that provide robust data security, including encryption and access control to ensure sensitive data is protected.

- Automation: The ability to automate the ingestion process to save time and reduce manual effort.

- Data validation: Ensuring the data is accurate and conforms to required standards before being loaded into the target system.
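
To make the validation and error-handling features above more concrete, here is a minimal Python sketch. It checks each incoming record against a simple expected schema and routes failures to a quarantine list (often called a dead-letter queue) instead of aborting the whole load. The schema, the field names, and the `validate_record`/`ingest` helpers are hypothetical and chosen for illustration. Real tools express these rules in their own configuration.

```python
from typing import Any

# Hypothetical schema: field name -> required Python type
SCHEMA: dict[str, type] = {"order_id": int, "customer": str, "amount": float}


def validate_record(record: dict[str, Any]) -> list[str]:
    """Return a list of validation errors (empty if the record is valid)."""
    errors = []
    for field, expected in SCHEMA.items():
        if field not in record or record[field] is None:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            errors.append(
                f"{field}: expected {expected.__name__}, got {type(record[field]).__name__}"
            )
    # Example business rule on top of the type checks
    if isinstance(record.get("amount"), float) and record["amount"] < 0:
        errors.append("amount: must be non-negative")
    return errors


def ingest(records: list[dict[str, Any]]):
    """Split records into loadable rows and quarantined rows with their reasons."""
    accepted, quarantined = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            quarantined.append({"record": record, "errors": errors})
        else:
            accepted.append(record)
    return accepted, quarantined


rows = [
    {"order_id": 1, "customer": "acme", "amount": 19.99},
    {"order_id": 2, "customer": "globex"},                    # missing amount
    {"order_id": "3", "customer": "initech", "amount": 5.0},  # wrong type
]
accepted, quarantined = ingest(rows)
print(len(accepted), len(quarantined))  # 1 2
```

Quarantining bad rows keeps one malformed record from blocking the rest of the load, while still leaving a trail that monitoring can alert on.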

Top Data Ingestion Tools

Selecting the best data ingestion tool for your organization depends on several factors, such as your data architecture, the variety of data sources you’re working with, your technical requirements, and your budget. Below, we’ll explore some of the top data ingestion tools that stand out in the industry for their features, scalability, and integration capabilities.

Apache NiFi

Apache NiFi is an open-source tool that provides a robust and flexible solution for automating the flow of data between systems. It’s known for its ease of use and its support for a wide variety of data sources and destinations. NiFi lets businesses design complex data ingestion pipelines visually, making it a good fit for organizations that need a customizable and scalable solution.

One of NiFi’s standout features is its ability to handle both batch and real-time data ingestion. It offers pre-built processors that help you connect to various data sources, including databases, cloud platforms, IoT devices, and file systems. NiFi also supports data transformation and routing, allowing you to clean, validate, and transform data as it’s ingested, all without needing to write complex code.

For real-time ingestion, NiFi’s low-latency capabilities make it a great fit for industries that require quick data processing and immediate insights. Its user-friendly interface also simplifies the design and monitoring of data flows, making it suitable for both technical and non-technical users.

Fivetran

Fivetran is known for its automated data pipeline solutions, designed to streamline data ingestion by handling the complexities of data integration for businesses. Fivetran automates the extraction and loading of data into a destination such as a cloud data warehouse, where transformations typically run afterward (ELT). It offers connections to a wide range of data sources like databases, applications, and cloud platforms.

One of Fivetran’s major strengths is its extensive library of pre-built connectors. These connectors automatically handle data extraction from sources such as Salesforce, Google Analytics, and various databases, saving your team time and effort. Fivetran takes care of data schema changes, ensuring that your data pipelines continue to function smoothly as source systems evolve.

Additionally, Fivetran provides monitoring and logging features that let you track the status of each sync and be alerted to any issues. This tool is well suited to organizations that want quick setup, reliability, and minimal maintenance for data ingestion.
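
Fivetran’s own schema-change handling is internal to the product. The general idea behind automatic schema evolution can still be sketched in a few lines. The Python example below uses the standard library’s `sqlite3` as a stand-in destination and adds a column to the target table whenever an incoming record carries a field the table has not seen before. The table, the sample fields, and the `load_with_schema_evolution` helper are hypothetical, and this is not how Fivetran implements the feature.

```python
import sqlite3


def existing_columns(conn: sqlite3.Connection, table: str) -> set[str]:
    # PRAGMA table_info returns one row per column; index 1 is the column name
    return {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}


def load_with_schema_evolution(conn: sqlite3.Connection, table: str, records: list[dict]) -> None:
    """Insert records, adding any columns the destination table is missing.

    Table and column names are interpolated into SQL, so they must come
    from a trusted source in this sketch.
    """
    for record in records:
        known = existing_columns(conn, table)
        for column in record.keys() - known:
            # Declared without a type, so SQLite stores values as given
            conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}"')
        cols = ", ".join(f'"{c}"' for c in record)
        placeholders = ", ".join("?" for _ in record)
        conn.execute(
            f"INSERT INTO {table} ({cols}) VALUES ({placeholders})",
            list(record.values()),
        )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (id INTEGER, email TEXT)")
load_with_schema_evolution(conn, "contacts", [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com", "phone": "555-0100"},  # a new field appears
])
print(sorted(existing_columns(conn, "contacts")))  # ['email', 'id', 'phone']
```

Older rows simply get NULL in the new column. This is the usual trade-off of additive schema evolution: nothing breaks, but downstream queries must tolerate missing values.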

Talend

Talend, now part of Qlik, is a data integration platform with cloud and on-premises deployment options and a comprehensive suite of tools for data ingestion, transformation, and management. Known for its powerful ETL (Extract, Transform, Load) capabilities, Talend provides an enterprise-grade solution for both batch and real-time data ingestion.

Talend supports a broad array of data sources, including cloud services, databases, and applications, making it ideal for organizations with diverse data architectures. With Talend, you can build sophisticated workflows to automate the ingestion process while incorporating data transformation and enrichment. It also offers robust error handling and data quality features, helping ensure that only accurate and relevant data is ingested.

Talend’s roots as an open-source project (Talend Open Studio) made it popular with businesses that need flexibility and customization in their data pipelines, although the free Open Studio edition was retired in early 2024. For larger enterprises, the commercial platform provides additional support, security, and scalability options.

AWS Glue

AWS Glue is a fully managed data ingestion and ETL service provided by Amazon Web Services. It’s designed to handle both batch and real-time data ingestion, making it suitable for a wide range of use cases across industries. AWS Glue simplifies the process of preparing data for analysis, offering powerful data transformation features that allow you to clean, catalog, and enrich data during ingestion.

One of the key benefits of AWS Glue is its seamless integration with other AWS services, such as Amazon S3, Redshift, and Athena. This makes it a natural choice for businesses that are already embedded within the AWS ecosystem. AWS Glue uses a serverless architecture, so you pay only for the resources your jobs consume, which can make it a cost-effective option for organizations of all sizes.

AWS Glue also features built-in data cataloging, allowing you to maintain a centralized repository of metadata, which helps ensure data consistency across your organization. The platform’s flexibility in supporting various data formats and sources further adds to its appeal.

Confluent

Confluent, built around Apache Kafka, is a real-time data streaming platform that enables businesses to ingest and process large streams of data with minimal latency. Confluent is designed for high-throughput data ingestion, making it ideal for companies that need to work with real-time data from a variety of sources, such as IoT devices, logs, or social media streams.

Confluent leverages Kafka’s distributed architecture to provide scalable, fault-tolerant data pipelines that handle massive volumes of data. Its ability to ingest and process data in real time enables businesses to make decisions based on the most current data available. Confluent offers robust support for stream processing and allows you to build custom data transformations as data flows through the system.

Confluent is particularly beneficial for organizations that require low-latency data processing, as it minimizes the time between data ingestion and the generation of actionable insights. It also integrates easily with other data storage solutions like Hadoop and cloud-based platforms, making it an excellent choice for modern, cloud-native architectures.

Google Cloud Dataflow

Google Cloud Dataflow is a fully managed service for stream and batch data processing. It is designed to handle the complexities of data ingestion, transformation, and analysis, providing businesses with the tools they need to build scalable and cost-efficient data pipelines.

Built on Apache Beam, Google Cloud Dataflow allows users to create data pipelines that can process both batch and real-time data in a unified framework. It integrates seamlessly with other Google Cloud products like BigQuery, Cloud Storage, and Pub/Sub, enabling businesses to create end-to-end data workflows in a cloud-native environment.

Google Cloud Dataflow’s ability to scale automatically based on the data load makes it ideal for organizations with fluctuating data volumes. With its support for complex transformations and real-time analytics, Google Cloud Dataflow is a strong contender for businesses looking to leverage cloud-based data ingestion tools.

StreamSets

IBM StreamSets is a data integration platform focused on continuous data ingestion, monitoring, and transformation. It offers a powerful solution for ingesting real-time data from a wide variety of sources, including databases, cloud applications, and streaming platforms.

StreamSets stands out for its ability to provide end-to-end data pipeline monitoring, which allows organizations to track the health and performance of their ingestion processes in real time. The platform’s graphical interface makes it easy to design, manage, and optimize complex data flows. StreamSets also includes built-in data quality controls that help keep inaccurate or malformed data out of your system.

Designed for scalability, StreamSets can handle high-velocity data streams, making it suitable for industries such as financial services, healthcare, and telecommunications. Its ability to integrate with both on-premises and cloud-based systems makes it a versatile choice for businesses with diverse infrastructure needs.

Informatica PowerCenter

Informatica PowerCenter is a leading data integration tool that provides robust ETL capabilities for data ingestion, transformation, and loading. It’s used by enterprises across various industries for its ability to handle large-scale data ingestion with high efficiency. PowerCenter supports integration with various data sources, including on-premises systems and cloud applications, making it a versatile choice for businesses that need to manage complex data architectures.

One of the tool’s standout features is its advanced data transformation capabilities, allowing businesses to clean, enrich, and validate data during ingestion. PowerCenter’s user-friendly interface enables easy workflow design and monitoring, while its scalability ensures it can handle both batch and real-time data ingestion.

Matillion

Matillion is a cloud-native data integration tool that simplifies the process of data ingestion and transformation. Built for modern cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake, Matillion provides a user-friendly environment to automate data workflows. Its visual interface makes it easy for users to design and execute complex data ingestion pipelines without writing extensive code.

Matillion is built to run transformations at scale by pushing the work down to the target warehouse’s own compute, an ELT (Extract, Load, Transform) approach. It also supports batch and real-time data ingestion, making it a flexible option for businesses of all sizes. With pre-built connectors to a wide variety of data sources, Matillion is ideal for companies looking to move data into cloud platforms with minimal effort.

Azure Data Factory

Azure Data Factory (ADF) is Microsoft’s cloud-based data integration service, designed to help businesses automate and orchestrate data ingestion, transformation, and movement across on-premises and cloud environments. ADF is primarily batch-oriented, with schedule-based and event-based triggers that support near-real-time scenarios, and its pipelines can handle large data volumes.

ADF offers an extensive library of connectors to cloud services, databases, APIs, and more. Its native integration with other Azure services such as Azure Databricks and Azure Synapse Analytics provides a seamless experience for businesses operating within the Microsoft ecosystem. The platform is highly scalable and customizable, making it suitable for businesses with diverse and complex data ingestion needs.

MuleSoft

MuleSoft is a leading integration platform that provides tools for automating data ingestion, data integration, and API management. Its Anypoint Platform allows businesses to connect to various data sources, including cloud applications, databases, and on-premises systems. MuleSoft is ideal for organizations that need to ingest and manage data across hybrid environments, such as those that operate both on-premises and in the cloud.

MuleSoft’s API-led approach makes it easy to connect disparate data sources, while its data integration capabilities allow businesses to orchestrate complex data ingestion pipelines. Additionally, MuleSoft offers powerful monitoring and analytics tools, enabling businesses to track and optimize data flows in real time.

SnapLogic

SnapLogic is a cloud-based integration platform that focuses on automating data ingestion and ETL workflows with minimal code. Its AI-powered workflows allow businesses to ingest data from a wide variety of sources, including databases, cloud platforms, and APIs. SnapLogic supports batch and real-time ingestion, providing the flexibility needed for organizations with varying data processing requirements.

One of SnapLogic’s strongest features is its visual interface, which makes it easy to design and manage data pipelines without writing extensive code. It also integrates with machine learning models and artificial intelligence tools, enabling businesses to enhance their data ingestion processes with predictive analytics.

Stitch

Stitch is a cloud-based data integration platform designed to simplify the process of ingesting data into cloud data warehouses. It offers pre-built connectors for a wide variety of data sources, including databases, applications, and cloud storage systems. Stitch is designed for ease of use, enabling businesses to set up and automate data ingestion with minimal configuration.

Stitch replicates data on a configurable schedule (batch), which makes it a practical solution for businesses looking to consolidate multiple data sources into their data warehouses. It’s known for its simplicity, making it an excellent choice for companies that need a straightforward data ingestion tool without unnecessary complexity.

Apache Kafka

Apache Kafka is a distributed streaming platform designed for high-throughput data ingestion and real-time processing. Kafka is commonly used in systems that require the ingestion of large amounts of real-time data from sources such as logs, sensors, and applications. Kafka’s distributed nature makes it highly scalable, allowing businesses to handle high volumes of data across multiple sources.

Kafka is particularly well-suited for enterprises that need to ingest streaming data with low latency. It also integrates well with other big data tools such as Hadoop and Spark, allowing businesses to build complex data pipelines for advanced analytics and machine learning applications.
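
Kafka’s scalability comes largely from splitting each topic into partitions. Records with the same key go to the same partition, which preserves their order, while different partitions can live on different brokers and be read by different consumers in parallel. The toy in-memory model below illustrates that idea in Python. It is not Kafka’s client API, and it uses CRC32 from the standard library as a stand-in for the murmur2 hash that Kafka’s default Java partitioner applies to keys. The `ToyTopic` and `ToyConsumer` classes are hypothetical.

```python
import zlib


class ToyTopic:
    """In-memory model of a partitioned, append-only log (not a real Kafka client)."""

    def __init__(self, num_partitions: int):
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Stand-in hash: Kafka's default partitioner uses murmur2, not CRC32
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key: str, value: str) -> tuple[int, int]:
        """Append a record and return (partition, offset), like a produce acknowledgment."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1


class ToyConsumer:
    """Reads assigned partitions and tracks its own position (offset) in each."""

    def __init__(self, topic: ToyTopic, assigned: list[int]):
        self.topic = topic
        self.offsets = {p: 0 for p in assigned}

    def poll(self) -> list[tuple[str, str]]:
        records = []
        for p, offset in self.offsets.items():
            new = self.topic.partitions[p][offset:]
            records.extend(new)
            self.offsets[p] = offset + len(new)  # commit the new position
        return records


topic = ToyTopic(num_partitions=3)
for i in range(6):
    topic.produce(key=f"sensor-{i % 2}", value=f"reading-{i}")

# Two consumers split the partitions between them, as in a consumer group
c1 = ToyConsumer(topic, assigned=[0, 1])
c2 = ToyConsumer(topic, assigned=[2])
print(len(c1.poll()) + len(c2.poll()))  # 6: every record is read exactly once
```

Because consumers store offsets rather than deleting records, another consumer group could replay the same log from the beginning. That property is a large part of why Kafka works well as a shared ingestion backbone.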

DataRobot

DataRobot is a machine learning platform that also includes data ingestion capabilities, particularly focused on automating the data pipeline process. While its primary function is in machine learning and AI model building, DataRobot provides tools for ingesting and processing data, making it a strong choice for organizations that require a combination of data ingestion and predictive analytics.

DataRobot’s platform integrates with a wide range of data sources and offers automatic data preparation features, ensuring that data is clean, consistent, and ready for analysis. Its machine learning integration makes it a great choice for businesses looking to incorporate AI-driven insights into their data ingestion workflows.

dbt (data build tool)

dbt is a popular open-source data transformation tool designed for modern data teams. Strictly speaking, dbt does not ingest data. It handles the transformation step after data has been loaded into the warehouse, which makes it a common companion to ingestion tools in ELT pipelines. Teams write transformations as SQL SELECT statements (called models), and dbt runs them inside the warehouse, typically on a schedule rather than in real time.

dbt integrates well with cloud data warehouses like Snowflake, BigQuery, and Redshift, making it an excellent option for organizations that rely on cloud-native solutions. Its transformation features and support for version control and testing make it an attractive choice for businesses focused on data quality and consistency.
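
In dbt, a model is a SQL SELECT statement that dbt materializes as a table or view. A generic test such as `unique` or `not_null` compiles to a query that returns the failing rows, and the test passes when that query returns nothing. The Python sketch below imitates that pattern with the standard library’s `sqlite3` so it runs without a warehouse or dbt installed. The table names and the `run_model`/`run_test` helpers are hypothetical. This is an illustration of the idea, not how dbt is invoked: real dbt models live in `.sql` files and run through the `dbt` command-line tool.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Raw data as an ingestion tool might have loaded it (note the duplicate id 2)
conn.execute("CREATE TABLE raw_orders (id INTEGER, status TEXT, amount_cents INTEGER)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, "shipped", 1999), (2, "PENDING", 500), (2, "PENDING", 500), (3, None, 700)],
)

# A "model": just a SELECT statement, materialized here as a table
STG_ORDERS = """
SELECT DISTINCT id,
       LOWER(status) AS status,
       amount_cents / 100.0 AS amount
FROM raw_orders
"""


def run_model(name: str, select_sql: str) -> None:
    """Rebuild the model's table from its SELECT statement."""
    conn.execute(f"DROP TABLE IF EXISTS {name}")
    conn.execute(f"CREATE TABLE {name} AS {select_sql}")


def run_test(sql: str) -> list[tuple]:
    """A test query selects failing rows; an empty result means the test passes."""
    return conn.execute(sql).fetchall()


run_model("stg_orders", STG_ORDERS)

unique_id = "SELECT id FROM stg_orders GROUP BY id HAVING COUNT(*) > 1"
not_null_status = "SELECT * FROM stg_orders WHERE status IS NULL"

print(run_test(unique_id))        # [] (passes once DISTINCT removes the duplicate)
print(run_test(not_null_status))  # [(3, None, 7.0)] (fails: order 3 has no status)
```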

CloverDX

CloverDX is a comprehensive data integration platform that enables businesses to automate data ingestion, transformation, and processing. It offers a visual design environment for creating complex data workflows, allowing users to integrate and transform data from various sources with minimal coding. CloverDX excels in scenarios where data needs to be processed across multiple systems and formats.

The platform is highly flexible, supporting a wide range of data sources and destinations. Its scalability and integration capabilities make it ideal for enterprises that need to build and manage large-scale data pipelines. CloverDX’s strong focus on data quality helps ensure that only accurate and clean data is ingested into your system, enabling better business insights.

Each of these tools brings unique capabilities to the table, depending on your specific data ingestion needs. Whether you need a simple, automated data pipeline or a more complex, real-time streaming solution, there’s a tool that can support your organization’s data architecture and help you scale effectively.

Types of Data Ingestion

Understanding the different types of data ingestion is essential to selecting the right tool for your organization’s needs. Data ingestion isn’t a one-size-fits-all process—depending on your data type, business needs, and goals, the approach you choose can significantly impact the efficiency and effectiveness of your operations.

Batch vs. Real-Time Ingestion

The first major distinction in data ingestion is between batch and real-time ingestion. The choice between the two primarily depends on how quickly you need to process and analyze the data.

Batch ingestion refers to the process of collecting data in chunks and then loading it into your system at scheduled intervals—be it hourly, daily, or weekly. This approach works well for non-time-sensitive data or processes that do not require instant analysis. For example, if you’re gathering data from transactional systems or monthly reports, batch ingestion is efficient and cost-effective. It also allows for more controlled data processing since the system can process large amounts of data at once during a scheduled window.

On the other hand, real-time ingestion (also called streaming ingestion) handles data as it arrives, allowing you to process and analyze it immediately. This method is ideal for use cases where up-to-the-minute insights are necessary, such as monitoring website traffic, social media feeds, financial transactions, or sensor data from IoT devices. Real-time ingestion helps you stay agile, quickly respond to anomalies, and make decisions based on the freshest data available. It does come with challenges, such as higher infrastructure demands and the need for more robust data management and monitoring tools.
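
The practical difference between the two modes is when the processing code runs. In the Python sketch below, the same hypothetical `process` function is applied once to a whole accumulated batch, and then record by record to a stream modeled as a generator. The event shape and function names are assumptions for illustration. Real streaming systems add concerns such as checkpointing and late-arriving data, which this sketch ignores.

```python
from typing import Iterable, Iterator


def process(events: Iterable[dict]) -> int:
    """Hypothetical transform/load step; here it simply sums the event values."""
    return sum(e["value"] for e in events)


def event_source(n: int) -> Iterator[dict]:
    """Stand-in for a source; a real stream would be unbounded (e.g. a message queue)."""
    for i in range(n):
        yield {"id": i, "value": i * 10}


def batch_ingest(accumulated: list[dict]) -> int:
    # Batch: events pile up until the scheduled window, then are processed together
    return process(accumulated)


def stream_ingest(source: Iterable[dict]) -> Iterator[int]:
    # Streaming: each event is processed on arrival, so results update continuously
    running_total = 0
    for event in source:
        running_total += process([event])
        yield running_total


print(batch_ingest(list(event_source(4))))   # 60: one result, after the window closes
print(list(stream_ingest(event_source(4))))  # [0, 10, 30, 60]: one result per event
```

Both paths reach the same final answer. The streaming path simply makes intermediate results available sooner, at the cost of running the processing code far more often.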

Structured vs. Unstructured Data

Another critical aspect of data ingestion is how your system handles different types of data. Structured data is highly organized and formatted in a way that is easy for systems to process, typically found in relational databases, spreadsheets, or CSV files. This type of data is ideal for ingestion through traditional ETL processes and requires less effort in terms of formatting or transformation before being stored in databases or data warehouses.

On the other hand, unstructured data lacks a predefined format or structure, making it harder to categorize or analyze. Examples include text-heavy content such as emails, social media posts, and free-form logs, as well as media such as video and audio files. Ingesting unstructured data often requires more advanced tools and techniques to parse, analyze, and transform it into a format that’s useful for reporting or analysis. These tools might include natural language processing (NLP) or machine learning algorithms to derive insights from the raw data.
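
Logs are a common middle ground: each line is free text, but there is often a pattern that a parser can exploit. As a minimal sketch, the Python example below uses a regular expression to turn web-server-style log lines into structured records and sets aside lines that do not match. The pattern is loosely modeled on the Common Log Format, and the output field names are assumptions chosen for illustration.

```python
import re
from datetime import datetime
from typing import Optional

# Loosely modeled on the Common Log Format used by many web servers
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_line(line: str) -> Optional[dict]:
    """Turn one log line into a structured record, or None if it doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None  # unparseable: leave for manual review or a smarter parser
    fields = match.groupdict()
    return {
        "ip": fields["ip"],
        "timestamp": datetime.strptime(fields["ts"], "%d/%b/%Y:%H:%M:%S %z"),
        "method": fields["method"],
        "path": fields["path"],
        "status": int(fields["status"]),
        "bytes": 0 if fields["size"] == "-" else int(fields["size"]),
    }


lines = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    "kernel: eth0 link up",  # does not match the pattern
]
parsed = [parse_line(line) for line in lines]
print(parsed[0]["status"], parsed[1])  # 200 None
```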

More recently, the ability to handle both structured and unstructured data is becoming a critical feature of modern data ingestion tools. Businesses that rely on diverse data sources—like combining customer records with social media feedback or IoT device sensor data—need a tool that can handle both types seamlessly and combine them into a unified system for analysis.

Data Transformation during Ingestion

As data moves through the ingestion pipeline, it’s often necessary to apply transformations to ensure that the data is in the right format, cleaned, and optimized for storage and analysis. ETL (Extract, Transform, Load) is the traditional approach: data is transformed before it is loaded into the destination system. ELT (Extract, Load, Transform) reverses the last two steps, loading raw data first and transforming it inside the destination, typically a cloud data warehouse with enough compute to do the work. Separately, the rise of real-time processing means that streaming tools can also apply lightweight transformations in flight, as each record is ingested.
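
The difference in ordering is easiest to see side by side. This minimal Python sketch uses the standard library’s `sqlite3` as a stand-in for a data warehouse. The ETL path cleans records in application code before inserting them, while the ELT path loads the raw records first and then transforms them with SQL inside the database. The table and column names and the sample records are hypothetical.

```python
import sqlite3

# Hypothetical raw records: untrimmed names, inconsistent case, one bad age
RAW = [("  Alice ", "42"), ("BOB", "17"), ("carol", "n/a")]


def transform(name: str, age: str):
    """ETL-side cleaning in Python; returns None for rows that fail validation."""
    if not age.isdigit():
        return None
    return name.strip().capitalize(), int(age)


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse

# ETL: transform first, then load only the cleaned rows
conn.execute("CREATE TABLE users_etl (name TEXT, age INTEGER)")
cleaned = [row for row in (transform(*r) for r in RAW) if row is not None]
conn.executemany("INSERT INTO users_etl VALUES (?, ?)", cleaned)

# ELT: load raw rows untouched, then transform with SQL inside the destination
conn.execute("CREATE TABLE users_raw (name TEXT, age TEXT)")
conn.executemany("INSERT INTO users_raw VALUES (?, ?)", RAW)
conn.execute(
    """
    CREATE TABLE users_elt AS
    SELECT UPPER(SUBSTR(TRIM(name), 1, 1)) || LOWER(SUBSTR(TRIM(name), 2)) AS name,
           CAST(age AS INTEGER) AS age
    FROM users_raw
    WHERE age <> '' AND age NOT GLOB '*[^0-9]*'  -- keep all-digit ages only
    """
)

etl = conn.execute("SELECT name, age FROM users_etl ORDER BY name").fetchall()
elt = conn.execute("SELECT name, age FROM users_elt ORDER BY name").fetchall()
print(etl == elt, etl)  # True [('Alice', 42), ('Bob', 17)]
```

Both paths produce the same result. In the ELT path, however, the untouched `users_raw` table remains available, so the transformation can be changed and re-run later without extracting from the source again.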

This transformation process can include several tasks, such as data cleaning, which removes duplicates, handles missing values, and corrects inconsistencies. Data normalization may be applied to ensure that all values are consistent and can be analyzed together, while data aggregation might be used to summarize large volumes of data into more digestible formats for reporting. Some tools allow you to create custom transformations specific to your business’s needs, such as merging datasets from different sources, converting formats (e.g., JSON to CSV), or enriching data with additional context (e.g., geolocation data).
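
Several of the transformations just described can be chained in a few lines of standard-library Python. The sketch below does the following in order:

- deduplicates JSON records
- fills a missing value
- normalizes a unit
- enriches each record from a small lookup table
- aggregates totals
- writes the result as CSV

The field names, the default currency, and the store-to-region lookup are hypothetical stand-ins for whatever your own pipeline uses.

```python
import csv
import io
import json
from collections import defaultdict

# Hypothetical input: one exact duplicate, one missing currency, amounts in cents
RAW_JSON = """[
  {"id": 1, "store": "S1", "amount_cents": 1250, "currency": "usd"},
  {"id": 1, "store": "S1", "amount_cents": 1250, "currency": "usd"},
  {"id": 2, "store": "S2", "amount_cents": 800, "currency": null},
  {"id": 3, "store": "S1", "amount_cents": 300, "currency": "USD"}
]"""

STORE_REGION = {"S1": "west", "S2": "east"}  # hypothetical enrichment lookup
DEFAULT_CURRENCY = "USD"  # assumption: how missing currencies should be filled


def transform(records: list[dict]) -> list[dict]:
    seen, out = set(), []
    for r in records:
        if r["id"] in seen:  # cleaning: drop duplicates by id
            continue
        seen.add(r["id"])
        out.append({
            "id": r["id"],
            "store": r["store"],
            "amount": r["amount_cents"] / 100,  # normalization: cents to whole units
            "currency": (r["currency"] or DEFAULT_CURRENCY).upper(),  # fill gap, standardize case
            "region": STORE_REGION.get(r["store"], "unknown"),  # enrichment
        })
    return out


def aggregate(rows: list[dict]) -> dict[str, float]:
    totals = defaultdict(float)
    for row in rows:
        totals[row["store"]] += row["amount"]  # aggregation: total per store
    return dict(totals)


def to_csv(rows: list[dict]) -> str:
    # Format conversion: JSON records to CSV text
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


rows = transform(json.loads(RAW_JSON))
print(aggregate(rows))  # {'S1': 15.5, 'S2': 8.0}
print(to_csv(rows))
```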

Ultimately, whether you’re ingesting data in batch or real time, the goal of the transformation is to ensure that the data entering your system is clean, usable, and ready to fuel insights. While batch ingestion might allow for complex transformations before data is loaded, real-time ingestion often requires more automated, lightweight transformations to minimize latency. Having the right tools in place to manage both the ingestion and transformation process is key to maintaining data integrity and ensuring that your system performs efficiently.

Data Ingestion Tool Features to Look For

Selecting the right data ingestion tool is a critical decision for any business. With so many options available, it’s easy to get overwhelmed. However, by focusing on a few key factors, you can make an informed choice that best aligns with your business goals and technical requirements. Here are the essential considerations to keep in mind when evaluating data ingestion tools.

Scalability and Performance

As your business grows, your data ingestion needs will likely evolve. Whether you’re dealing with a small stream of data or massive datasets, scalability is a crucial factor. A tool that scales easily allows you to increase your data ingestion capacity without having to worry about performance bottlenecks.

Performance is equally important—especially when working with large volumes of data. A tool that offers high throughput and low latency ensures that your data pipeline operates efficiently without causing delays. This becomes especially relevant when processing real-time data, where even slight delays can result in missed opportunities or decisions based on outdated information. When considering scalability, also pay attention to how the tool handles increasing data complexity and variety. A tool that supports both horizontal and vertical scaling is ideal for growing businesses with ever-expanding data ingestion requirements.

Integration Capabilities with Existing Data Ecosystems

Your data ecosystem is likely composed of various tools, databases, and cloud platforms that work together to process and analyze your data. When choosing a data ingestion tool, you must ensure that it integrates seamlessly with your existing systems. This includes both your data storage solutions, such as data lakes or warehouses, and any analytics tools you’re using, like business intelligence platforms or machine learning models.

Good integration capabilities save you time and effort by allowing you to connect your data ingestion tool with minimal manual work. Look for a tool that supports integration with popular data sources, such as relational databases, NoSQL databases, cloud platforms (e.g., AWS, Google Cloud, Azure), APIs, and other external services. Additionally, make sure the tool can handle the complexity of multi-cloud and hybrid cloud environments, as many businesses rely on a combination of on-premises and cloud-based systems. The ease of integration with your existing tools will reduce friction in adopting the new system and speed up time-to-value.

Data Security and Compliance Features

Data security should be a top priority, especially if you handle sensitive information such as personally identifiable information (PII), financial data, or health records. When choosing a data ingestion tool, make sure it has robust security features to protect your data at rest and in transit. Look for encryption, both during the data ingestion process and when data is stored in your systems. Additionally, tools that offer access control features allow you to limit who can view or modify the data, ensuring that only authorized personnel can access sensitive information.

Beyond security, data compliance is another critical aspect to consider. Depending on your industry and the region where your business operates, you may need to comply with regulations like GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act), or HIPAA (Health Insurance Portability and Accountability Act). A data ingestion tool that supports these compliance standards and provides audit trails, data masking, and other compliance features will help you mitigate risks and avoid potential fines or legal issues.
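
Data masking is one of the compliance features mentioned above. A common variant applies it during ingestion, so raw identifiers never reach the analytics store. The Python sketch below does two things:

- It pseudonymizes an email address with a keyed hash (HMAC-SHA256). This keeps the value usable for joins across tables without making it readable.
- It partially masks a phone number for display.

The field names and the hard-coded key are placeholders. In practice, the key would come from a secrets manager. Pseudonymization reduces exposure, but on its own it does not establish compliance with any particular regulation.

```python
import hashlib
import hmac

# Placeholder key for illustration only; load a real key from a secrets manager
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str) -> str:
    """Deterministic keyed hash: the same input always maps to the same token."""
    normalized = value.strip().lower()
    return hmac.new(PSEUDONYM_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_phone(phone: str) -> str:
    """Keep only the last four digits visible."""
    digits = [c for c in phone if c.isdigit()]
    return "*" * max(len(digits) - 4, 0) + "".join(digits[-4:])


def mask_record(record: dict) -> dict:
    """Return a copy of the record with PII fields masked; other fields pass through."""
    masked = dict(record)
    masked["email"] = pseudonymize(record["email"])
    masked["phone"] = mask_phone(record["phone"])
    return masked


print(mask_record({"id": 7, "email": "Jane@Example.com", "phone": "+1 (555) 010-0199"}))
```

Using a keyed hash rather than a plain SHA-256 matters here. Without the key, an attacker can hash a list of known email addresses and match them against the tokens.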

Support for Various Data Sources (APIs, Databases, Streaming, etc.)

One of the main reasons you’ll need a data ingestion tool is to centralize and harmonize data from multiple sources. These sources can vary widely—from APIs and databases to live streaming data and IoT sensors. Your data ingestion tool must support a variety of data types and be flexible enough to handle the specific requirements of each data source.

For example, APIs might require specific authentication methods and rate limiting, while databases could require efficient query processing. Streaming data, on the other hand, may involve handling high-velocity inputs and ensuring low-latency processing. A good tool will seamlessly connect to these diverse sources and handle the challenges each one presents. Furthermore, it should allow you to easily scale as you add new data sources in the future.
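
For API sources, rate limits and transient failures are usually handled with a client-side throttle plus retries with exponential backoff. The Python sketch below shows both: a token bucket that limits request rate, and a retry wrapper. Both accept an injectable clock or sleep function, so they can be tested without a network. `fetch_page` and `TransientError` are hypothetical stand-ins for a real API call and its retryable errors. The rate, capacity, and retry settings are illustrative values, not recommendations from any particular API.

```python
import random
import time
from typing import Callable


class TokenBucket:
    """Allow up to `rate` requests per second, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class TransientError(Exception):
    """Hypothetical error type for retryable failures (e.g. HTTP 429 or 503)."""


def with_retries(call, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Retry `call` on TransientError with exponential backoff plus jitter."""
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # give up and surface the error to monitoring
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronized retries


attempts = {"n": 0}


def fetch_page():  # hypothetical API call that fails twice, then succeeds
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientError("429 Too Many Requests")
    return {"items": [1, 2, 3]}


print(with_retries(fetch_page, sleep=lambda s: None))  # {'items': [1, 2, 3]}

fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=2, clock=lambda: fake_now[0])
print([bucket.try_acquire() for _ in range(3)])  # [True, True, False]
fake_now[0] = 0.5  # half a second later, one token has been refilled
print(bucket.try_acquire())  # True
```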

Cost and Pricing Structure

While evaluating the functionality and performance of data ingestion tools is essential, it’s equally important to consider their cost. Many tools operate on a subscription model, while others might charge based on the volume of data ingested or the number of users. It’s important to align the tool’s pricing structure with your business’s budget and anticipated usage.

Consider the overall value the tool provides in terms of performance, scalability, and ease of integration. Sometimes, a slightly higher upfront cost may result in long-term savings due to greater efficiency or reduced need for manual intervention. Be sure to account for all hidden costs, such as training, support, or scaling fees, to get a clear picture of the total cost of ownership.

Additionally, some tools offer free trials or tiered pricing, where you can start with basic features and scale up as your data needs grow. If you’re a small or growing business, opting for a tool with a pay-as-you-go pricing model might help you avoid overpaying for features you don’t yet need. Be sure to weigh both the initial costs and the potential scalability of the tool to ensure it remains cost-effective as your business expands.
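
A quick break-even calculation can make the comparison between flat and usage-based pricing concrete. The Python sketch below compares a hypothetical flat subscription with a hypothetical per-gigabyte plan and adds a one-time setup or training cost to both when estimating total cost of ownership. Every price in it is invented for illustration, so substitute the vendor quotes and volume forecasts you actually have.

```python
def monthly_cost_usage(gb: float, price_per_gb: float, base_fee: float = 0.0) -> float:
    """Usage-based plan: optional base fee plus a per-GB charge."""
    return base_fee + gb * price_per_gb


def break_even_gb(flat_fee: float, price_per_gb: float, base_fee: float = 0.0) -> float:
    """Monthly volume above which the flat plan becomes the cheaper option."""
    return (flat_fee - base_fee) / price_per_gb


def total_cost_of_ownership(monthly: float, months: int, one_time: float) -> float:
    """Include one-time costs such as setup or training, not just the subscription."""
    return one_time + monthly * months


# Hypothetical numbers for illustration only
FLAT_FEE = 1_000.0    # per month
PRICE_PER_GB = 2.5    # usage plan, per GB ingested
SETUP = 2_000.0       # one-time cost, assumed equal for both plans

print(break_even_gb(FLAT_FEE, PRICE_PER_GB))  # 400.0 (GB per month)
for gb in (100, 400, 800):
    usage = total_cost_of_ownership(monthly_cost_usage(gb, PRICE_PER_GB), 12, SETUP)
    flat = total_cost_of_ownership(FLAT_FEE, 12, SETUP)
    print(f"{gb} GB/month: usage plan {usage:,.0f}, flat plan {flat:,.0f} per year")
```

Because forecasts are uncertain, it can help to run the same comparison for a low, expected, and high growth scenario rather than a single volume.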

Data Ingestion Best Practices

Data ingestion is a crucial part of building an efficient and scalable data pipeline. By following best practices, you can ensure that your data ingestion process is smooth, efficient, and ready to scale as your business grows.

- Plan your data flow carefully: Define the sources, transformations, and storage destinations early to avoid bottlenecks or missed opportunities later.

- Implement error handling and data quality checks: Ensure that your tool has mechanisms to validate data, catch errors, and log any discrepancies during the ingestion process.

- Optimize for performance: Streamline your ingestion pipeline so it can handle large data volumes and real-time processing without slowing down your source or destination systems.

- Ensure data consistency: Regularly clean and deduplicate data to prevent inconsistencies that could impact downstream analysis and decision-making.

- Automate data ingestion where possible: Minimize manual intervention by setting up automated processes that handle routine data ingestion tasks, saving time and reducing the risk of human error.

- Monitor your data pipeline: Set up real-time monitoring to track the health of your ingestion pipeline and quickly identify and address issues.

- Focus on security and compliance: Encrypt data both in transit and at rest, and make sure your ingestion processes comply with relevant regulations to avoid security breaches and legal complications.

- Test your system with real data: Run your ingestion tool under load with real-world data to confirm it performs well in live environments.

- Document your pipeline setup: Proper documentation ensures that your team can troubleshoot issues, scale systems, and understand how the data flows through your pipeline.

- Keep it flexible: Ensure that your data ingestion pipeline is scalable and adaptable to changing business needs, such as adding new data sources or expanding storage capacity.
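Two of the practices above, quality checks with error logging and deduplication, can be sketched in a few lines of Python. The required fields, their types, and the choice of `id` as the deduplication key are hypothetical; in practice they would come from your own schema.

```python
import logging
from typing import Any, Iterable

logger = logging.getLogger("ingestion")

Record = dict[str, Any]

# Hypothetical schema: required field -> accepted type(s)
REQUIRED_FIELDS = {"id": str, "email": str, "amount": (int, float)}


def validate(record: Record) -> list[str]:
    """Return a list of problems with the record; an empty list means it passed."""
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"{field} has type {type(record[field]).__name__}")
    return problems


def clean_batch(records: Iterable[Record]) -> tuple[list[Record], list[Record]]:
    """Split a batch into (accepted, rejected), dropping repeated ids and logging each rejection."""
    accepted: list[Record] = []
    rejected: list[Record] = []
    seen_ids: set[str] = set()
    for record in records:
        problems = validate(record)
        if not problems and record["id"] in seen_ids:
            problems = ["duplicate id"]
        if problems:
            logger.warning("rejected record %r: %s", record.get("id"), "; ".join(problems))
            rejected.append({"record": record, "problems": problems})
            continue
        seen_ids.add(record["id"])
        accepted.append(record)
    return accepted, rejected


# Usage
batch = [
    {"id": "a1", "email": "ana@example.com", "amount": 10},
    {"id": "a1", "email": "ana@example.com", "amount": 10},  # duplicate
    {"id": "a2", "amount": "ten"},                           # missing email, wrong type
]
accepted, rejected = clean_batch(batch)
```

Rejected records are kept alongside their reasons rather than silently dropped, so they can be inspected, fixed, and replayed later.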

Data Ingestion Challenges

While data ingestion tools offer many benefits, the process can come with its own set of challenges. Being aware of these potential roadblocks and knowing how to address them will make the data ingestion process smoother and more efficient.

- Handling large volumes of data: Ingesting vast amounts of data can lead to slow processing times or system crashes. To address this, implement data batching or parallel processing techniques to ensure your tool can handle high data loads efficiently.

- Dealing with diverse data formats: Data from different sources can come in various formats, which can complicate the ingestion process. Use transformation tools that can automatically adjust data formats during ingestion or implement a flexible schema to handle diverse data types.

- Ensuring data quality: Poor-quality data can lead to incorrect analysis and decision-making. Set up data quality checks to clean and validate data before it’s ingested into your system.

- Latency in real-time data ingestion: Real-time data ingestion can be delayed due to network issues or system bottlenecks. To minimize latency, use a tool designed for real-time processing and ensure that your system is optimized for speed.

- Integration issues with legacy systems: Older systems may not be compatible with modern data ingestion tools, making integration a challenge. Use API connectors or build custom integrations to bridge the gap between legacy systems and newer data tools.

- Data security and privacy concerns: Handling sensitive data can put your business at risk if it isn’t properly managed. Implement strong encryption and access controls, and ensure compliance with data protection regulations such as GDPR.

- Scalability limitations: As data grows, your ingestion system may struggle to keep up. Choose a tool that can scale to handle future growth in both data volume and complexity.

- Monitoring and maintaining the system: It’s easy for data ingestion pipelines to break down without proper monitoring. Set up automated alerts and tracking tools to monitor performance, and schedule regular maintenance to ensure everything runs smoothly.

- Ensuring system compatibility: The complexity of integrating various systems can lead to compatibility issues. Choose an ingestion tool with broad integration capabilities to work with your existing infrastructure.
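The batching and parallel processing suggested for large data volumes can be sketched with Python's standard `concurrent.futures` module. Here `load_batch` stands in for a hypothetical, thread-safe function that writes one batch to your destination and returns the number of rows written; the batch size and worker count are placeholders to tune against your own system.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield consecutive lists of at most `size` items (itertools.batched in 3.12+ yields tuples)."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    while chunk := list(islice(iterator, size)):
        yield chunk


def ingest_parallel(
    items: Iterable[T],
    load_batch: Callable[[list[T]], int],
    batch_size: int = 1000,
    workers: int = 4,
) -> int:
    """Load items in fixed-size batches across a thread pool; return total rows loaded."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map submits every batch up front and yields results in submission order
        return sum(pool.map(load_batch, batched(items, batch_size)))


# Usage with a stand-in loader that appends to an in-memory "table"
table: list[int] = []
table_lock = threading.Lock()


def fake_load(batch: list[int]) -> int:
    with table_lock:
        table.extend(batch)
    return len(batch)


rows_loaded = ingest_parallel(range(10_500), fake_load, batch_size=1000, workers=4)
```

Threads suit I/O-bound loads such as network writes. Because `Executor.map` collects every batch before returning results, a very large input should be fed in bounded windows to keep memory in check.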

Conclusion

Choosing the right data ingestion tool is essential to ensuring that your business can efficiently collect, manage, and process the vast amounts of data it needs. The tools mentioned in this guide offer a variety of features designed to meet different business needs, from real-time data processing to cloud-based solutions, all with varying levels of complexity and scalability. It’s important to consider factors like the size of your business, your data requirements, and the existing tools you use when deciding on the best tool for you. No single tool will be the right fit for everyone, but by understanding your needs and evaluating the strengths of each option, you can find a solution that will enhance your data pipeline and support your long-term goals.

Ultimately, the best data ingestion tool depends on your specific use cases and the resources available to you. Whether you want something user-friendly with minimal setup or a robust platform that can handle large-scale data ingestion and transformations, there’s a tool on this list that can help you streamline your processes. Take the time to explore each tool’s features and integrations so you can choose one that fits your business’s current and future needs; the right choice will improve the efficiency and quality of your data workflows, leading to better decision-making and stronger insights.

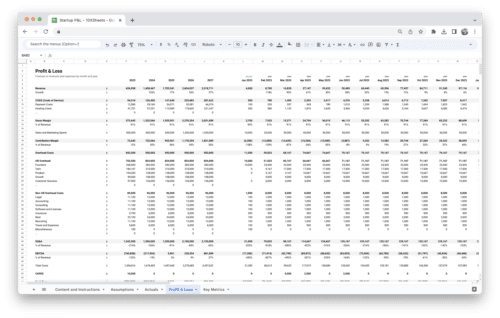

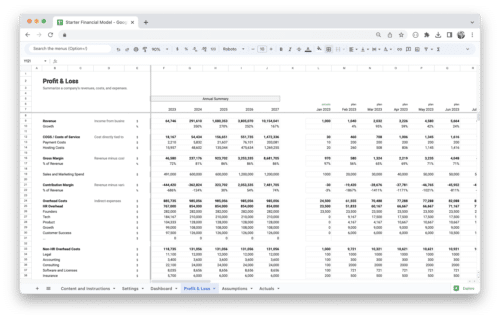

Get Started With a Prebuilt Template!

Looking to streamline your business financial modeling process with a prebuilt customizable template? Say goodbye to the hassle of building a financial model from scratch and get started right away with one of our premium templates.

- Save time with no need to create a financial model from scratch.

- Reduce errors with prebuilt formulas and calculations.

- Customize to your needs by adding/deleting sections and adjusting formulas.

- Automatically calculate key metrics for valuable insights.

- Make informed decisions about your strategy and goals with a clear picture of your business performance and financial health.

-

Sale!

Marketplace Financial Model Template

Original price was: $219.00.$149.00Current price is: $149.00. Add to Cart -

Sale!

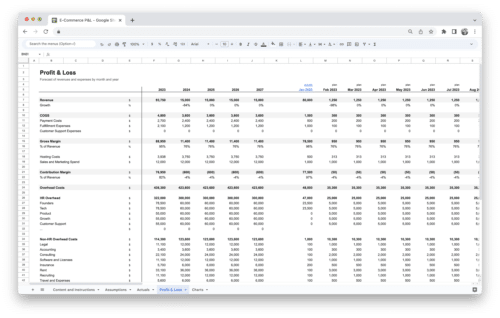

E-Commerce Financial Model Template

Original price was: $219.00.$149.00Current price is: $149.00. Add to Cart -

Sale!

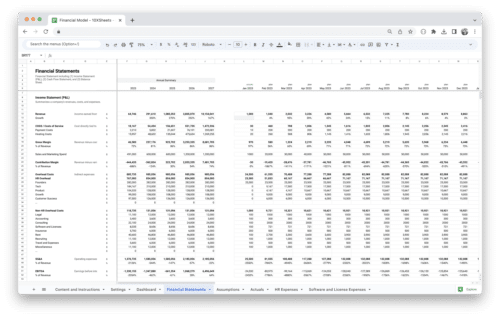

SaaS Financial Model Template

Original price was: $219.00.$149.00Current price is: $149.00. Add to Cart -

Sale!

Standard Financial Model Template

Original price was: $219.00.$149.00Current price is: $149.00. Add to Cart -

Sale!

E-Commerce Profit and Loss Statement

Original price was: $119.00.$79.00Current price is: $79.00. Add to Cart -

Sale!

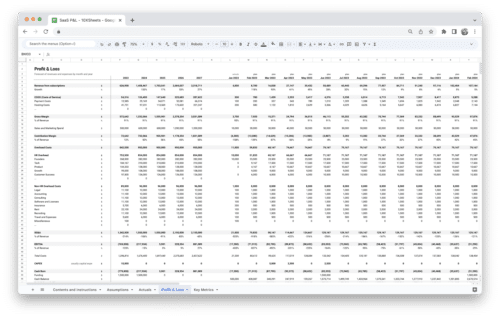

SaaS Profit and Loss Statement

Original price was: $119.00.$79.00Current price is: $79.00. Add to Cart -

Sale!

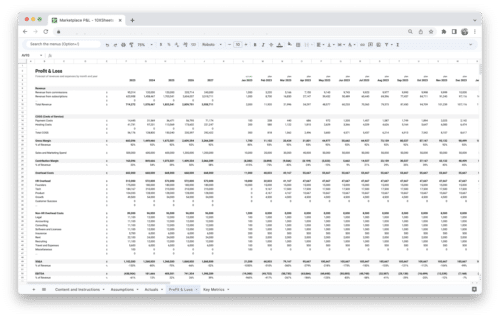

Marketplace Profit and Loss Statement

Original price was: $119.00.$79.00Current price is: $79.00. Add to Cart -

Sale!

Startup Profit and Loss Statement

Original price was: $119.00.$79.00Current price is: $79.00. Add to Cart -

Sale!

Startup Financial Model Template

Original price was: $119.00.$79.00Current price is: $79.00. Add to Cart